Recognising phishing is becoming more difficult

Phishing emails have certain characteristics that help to identify them as attacks. However, the criminals’ technical capabilities are developing rapidly, so linguistic features in particular will soon no longer be reliable indicators of phishing.

The rapid development of artificial intelligence poses great challenges for IT security: like any new technology, artificial intelligence and machine learning can be abused by criminals. In this blog article, we explain what this means for the fight against phishing, ransomware and spam, and how you can already protect yourself.

Electric Enkeltrick

The Enkeltrick (grandchild trick or nephew trick) is a scam in which fraudsters phone their victims, usually elderly or vulnerable people, and pretend to be close relatives in order to obtain money or valuables under false pretences.

When scammers gain the trust of victims at the front door or on the phone in order to obtain data, signatures or money, this is nothing more than phishing by other means. The spread of the Internet has greatly expanded the circle of potential victims: instant messaging services and email have been used for phishing since the 1990s.

The term spear phishing describes targeted attacks that attempt to persuade victims to perform certain actions or to give out information. The challenge for the criminals is always to establish credibility. The constantly increasing number of successful attacks suggests that they are getting better and better at this. The outlook is even bleaker when one considers the possibilities that artificial intelligence offers these criminals.

Researchers at the Government Technology Agency (GTA) in Singapore showed in a study that AI-as-a-service applications already make it possible not only to significantly improve the quality of phishing emails, but also to generate them at scale. In the study, the artificially generated phishing emails were even more effective than the manually written ones.

Can credibility be artificially created?

The researchers state in their study that three ingredients are needed to create a credible phishing email:

- Authority: The victim must believe that the attacker is authorised.

- Scarcity: The victim must feel the need for immediate action.

- Context: The phishing email must fit the environment and situation.

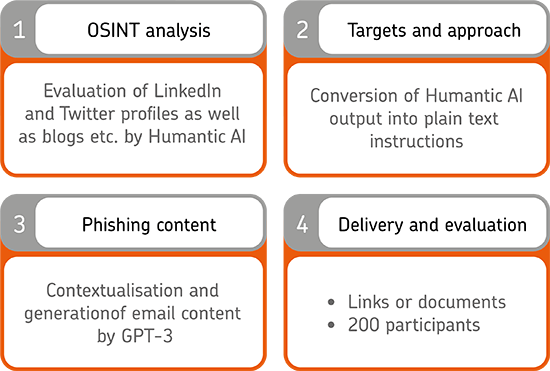

To produce these ingredients, the researchers created a Phishing Process Pipeline that replaced the manual steps in generating phishing content with an automated, AI-based workflow.

Step 1: AI-based analysis of potential victims using the OSINT principle

The first step is to find and analyse the future phishing targets. The analysis enables the attackers to embed the phishing email in a credible context and thus persuade the victim to click.

The analysis was carried out according to the so-called OSINT principle. OSINT stands for Open Source Intelligence and describes the collection of information from freely available sources, in this case for example LinkedIn profiles, Twitter profiles and blog articles.

For the analysis, the researchers used Humantic AI, one of numerous commercial API services that are already used today in sales and human resources to gain insights into the personality and behaviour of customers or job applicants.

Step 2: Definition of targets and approach based on the analysis results

The API output from Humantic AI was then converted into a short plain-text description of the target and of the desired way of addressing them.

Here is an example:

John Doe is located in Singapore. John Doe works as a cybersecurity specialist in a local technology firm. Write an email from Jane Doe from the human resource department convincing John Doe to fill up the attached form. Explain in small logical groupings. Emphasis on the facts, benefits and measurable outcomes.

Step 3: AI-based generation of phishing emails

The researchers then fed the generated plain-text instructions into another service, the Generative Pre-trained Transformer 3 (GPT-3) from OpenAI. This model had already attracted attention when it wrote a full article for The Guardian.

The result was a generated email that was “coherent and quite convincing”, according to a researcher involved. “It even derived a Singaporean law from the fact that the target was in Singapore: the Personal Data Protection Act.” Still, there was room for improvement: among other things, GPT-3 generated an invalid date and a link that did not work.

Step 4: Delivery of the phishing emails and evaluation

In the course of the study, two variants of the test were carried out with 200 participants over a period of three months. The variants had different objectives:

- Convincing the targets to click phishing links, or

- Convincing the targets to open an attached “contaminated” document.

Phishing-as-a-service

The few small errors that had to be corrected manually after the emails had passed through the pipeline should not hide the fact that this is only the first step of a larger development: “It takes millions of dollars to train a really good [AI] model […],” says Eugene Lim, cybersecurity specialist at the GTA. “But once you put it on AI-as-a-service it costs a couple of cents and it’s really easy to use – just text in, text out. […] Suddenly every single email on a mass scale can be personalised for each recipient.”

Easily accessible AI APIs with text-in/text-out functionality make AI affordable and usable for any criminal, which poses a major challenge for IT security.

Chips get more clicks

The evaluation of the emails sent revealed just how big this threat could become: significantly more people clicked on links and opened documents in the AI-generated emails than in the emails written by humans.

However, the researchers point out in the study that the sample size was small and that both the AI-generated and the human-written emails were created or revised by office staff and not by external attackers. This presumably made it easier to find the right approach for the emails.

Checking sender reputation offers protection against AI phishing

When AI-generated text can no longer be distinguished from human text, it pays to fall back on a proven defence mechanism: evaluating the sender reputation of the email itself. This offers an effective way to prevent phishing attacks and is easy to implement without expensive tools.

In our sender reputation special, you’ll learn how each method works and how it can help you protect yourself and your organisation from phishing with fake senders and other cyberattacks, and optimise your email security.
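To make the idea of a sender check more concrete, here is a minimal Python sketch. It assumes that the receiving mail server has already written SPF, DKIM and DMARC verdicts into an `Authentication-Results` header; these three methods are an assumption on our part, since the article does not name the methods covered by the special. The function names, the sample message and the pass/fail policy are hypothetical and only serve as illustration.

```python
import re
from email import message_from_string

# Assumption: the receiving server adds an Authentication-Results header
# containing verdicts such as "spf=pass", "dkim=fail" or "dmarc=none".
RESULT_PATTERN = re.compile(r"\b(spf|dkim|dmarc)=(\w+)", re.IGNORECASE)


def sender_checks(raw_email: str) -> dict:
    """Collect the SPF, DKIM and DMARC verdicts found in the headers."""
    message = message_from_string(raw_email)
    verdicts = {"spf": "none", "dkim": "none", "dmarc": "none"}
    for header in message.get_all("Authentication-Results", []):
        for method, result in RESULT_PATTERN.findall(header):
            verdicts[method.lower()] = result.lower()
    return verdicts


def looks_trustworthy(raw_email: str) -> bool:
    """Hypothetical policy: DMARC must pass, and so must SPF or DKIM."""
    verdicts = sender_checks(raw_email)
    return verdicts["dmarc"] == "pass" and "pass" in (
        verdicts["spf"],
        verdicts["dkim"],
    )


# Made-up sample message whose sender checks failed.
SAMPLE = (
    "Authentication-Results: mx.example.org; spf=fail smtp.mailfrom=example.com;\n"
    " dkim=none; dmarc=fail header.from=example.com\n"
    "From: Jane Doe <jane.doe@example.com>\n"
    "Subject: Please fill in the attached form\n"
    "\n"
    "Hello John, please find the form attached.\n"
)

if __name__ == "__main__":
    print(sender_checks(SAMPLE))
    print(looks_trustworthy(SAMPLE))
```

The point of the sketch is that the decision does not depend on the wording of the email at all, so a well-written AI-generated text gains the attacker nothing here.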

Focus on attachments and URLs

In the age of artificial intelligence, the handling of email attachments is also a decisive factor in the fight against malware. NoSpamProxy makes it possible to automatically convert attachments in Word, Excel or PDF format into non-critical PDF files based on rules. In the process, any malicious code is removed and the recipient is sent a guaranteed harmless attachment. Numerous other file formats, such as executable files, can be specifically recognised so that the attachment can be blocked or the entire email rejected.
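The rule-based handling described above can be pictured roughly as follows. This is a simplified Python sketch and not NoSpamProxy’s implementation: the rule sets, the function name and the decision by file extension alone are assumptions made for illustration. A real gateway would inspect the file content, because an extension can be changed at will.

```python
from pathlib import PurePath

# Hypothetical rule sets, chosen for illustration only.
CONVERT_TO_PDF = {".doc", ".docx", ".xls", ".xlsx", ".pdf"}
BLOCK = {".exe", ".scr", ".js", ".vbs", ".bat"}


def attachment_action(filename: str) -> str:
    """Return 'block', 'convert' or 'deliver' for an attachment name."""
    # Only the last extension counts, so "invoice.pdf.exe" is treated as .exe.
    suffix = PurePath(filename).suffix.lower()
    if suffix in BLOCK:
        return "block"
    if suffix in CONVERT_TO_PDF:
        return "convert"
    return "deliver"


if __name__ == "__main__":
    for name in ("Report.DOCX", "invoice.pdf.exe", "notes.txt"):
        print(name, "->", attachment_action(name))
```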

The URL Safeguard allows URLs in inbound emails to be rewritten so that when the user clicks on them, they are checked again to see if there are any negative assessments for this URL. This increases security, as some attackers change the destination of URLs a few hours after they have been sent. The URL Safeguard can be individually configured and, for example, only activated for unknown communication partners.
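The principle of URL rewriting can be sketched in a few lines of Python. The checking endpoint, the list of trusted domains and the function name are hypothetical and not taken from the product. The sketch only shows the general technique of replacing a link with one that points to a checking service, which can then assess the original target at the time of the click.

```python
import re
from urllib.parse import quote, urlsplit

# Hypothetical values for illustration only.
CHECK_ENDPOINT = "https://urlcheck.example.org/check?target="
TRUSTED_DOMAINS = {"partner.example.com"}

# Simplified pattern: trailing punctuation directly after a URL would be
# captured as part of the URL, which a real implementation has to handle.
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+")


def rewrite_urls(body: str) -> str:
    """Point every URL from an unknown host at the checking endpoint."""

    def replace(match: re.Match) -> str:
        url = match.group(0)
        host = urlsplit(url).hostname or ""
        if host in TRUSTED_DOMAINS:
            return url  # known communication partner: leave untouched
        # Percent-encode the original URL so it survives as a query value.
        return CHECK_ENDPOINT + quote(url, safe="")

    return URL_PATTERN.sub(replace, body)


if __name__ == "__main__":
    text = "See https://partner.example.com/doc and http://evil.example.net/a?b=1"
    print(rewrite_urls(text))
```

Because the check happens when the user clicks and not when the email arrives, a target that was harmless at delivery and swapped a few hours later can still be caught.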

Protection through metadata analysis

The Metadata Service in NoSpamProxy collects and analyses metadata on emails and attachments. Its great strength lies in centrally bundling the data of the numerous distributed NoSpamProxy instances and, based on this, recognising suspicious trends at an early stage.

Precisely because the Metadata Service does not look at the complete email, but only the metadata, it is effective in the fight against AI-based phishing: regardless of how good the AI-generated text is, Heimdall unerringly detects phishing links and the underlying patterns as well as dangerous attachments.
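How trends can be recognised from bundled metadata can be illustrated with a deliberately simple Python sketch. It is not a description of how the Metadata Service works internally: the record format (instance ID and link domain), the threshold and the function name are assumptions. The sketch flags a link domain as soon as enough independent instances report it, without ever looking at the text of an email.

```python
from collections import defaultdict

# Hypothetical metadata record: (instance_id, link_domain).
# No subject line and no message text are involved.


def suspicious_domains(reports, min_instances=3):
    """Return link domains reported by at least `min_instances` instances."""
    seen = defaultdict(set)
    for instance_id, domain in reports:
        seen[domain.lower()].add(instance_id)
    return sorted(
        domain
        for domain, instances in seen.items()
        if len(instances) >= min_instances
    )


if __name__ == "__main__":
    # Made-up reports for illustration.
    reports = [
        ("instance-a", "login-update.example.net"),
        ("instance-b", "login-update.example.net"),
        ("instance-c", "LOGIN-UPDATE.example.net"),
        ("instance-a", "newsletter.example.org"),
        ("instance-a", "newsletter.example.org"),
    ]
    print(suspicious_domains(reports))
```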